Algorithm Versus Humanity

Social media platforms are rage farming for profit

Date episode published: 07-Jan-26

“Recommendations drive a significant amount of the overall viewership on YouTube, even more than channel subscriptions or search.” That’s according to YouTube’s own engineers, who stress just how central this machinery is to what gets seen, when and by whom. It’s clearly laid out in a transparency post on the YouTube blog. (blog.youtube) But I can see it clearly in the stats of my two channels as well, Silicon Curtain and Silicon Wafers. What has resonance in the algorithm gets shown more, and videos that don’t resonate fall into a vacuum of obscurity, with low view counts, fewer comments, no revenue and few new subscribers. It seems to me that this has nothing to do with content quality and everything to do with the near instantaneous emotional reaction a video thumbnail and title provoke. The result is a race to the bottom for content quality and value. But there are other, more pernicious effects too. In this episode we unpack the cumulative, long-term damage that is potentially being caused by the extreme short-term mechanics of the social media algorithm: to mental health, to societal cohesion, to politics and even to civilisation itself. Google’s mantra was ‘don’t be evil’, yet the grinding mechanisms of its algorithms could be encouraging deep societal ‘evils’.

See the full video episode:

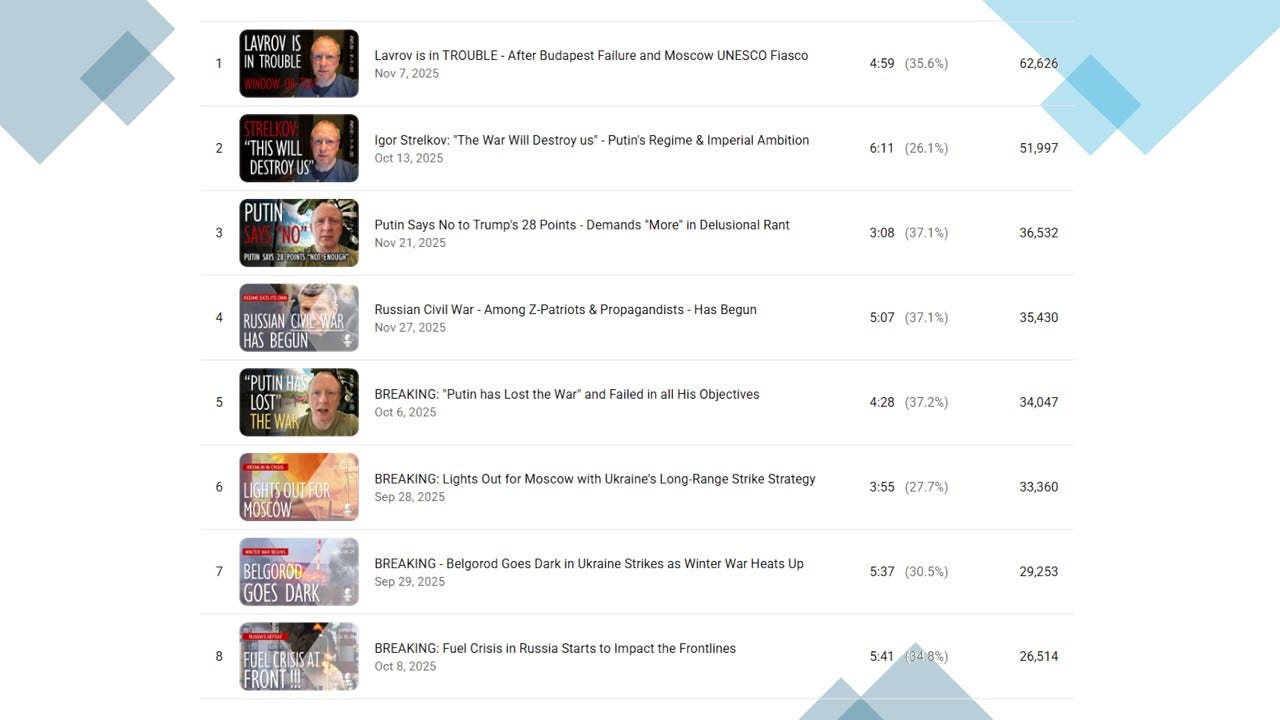

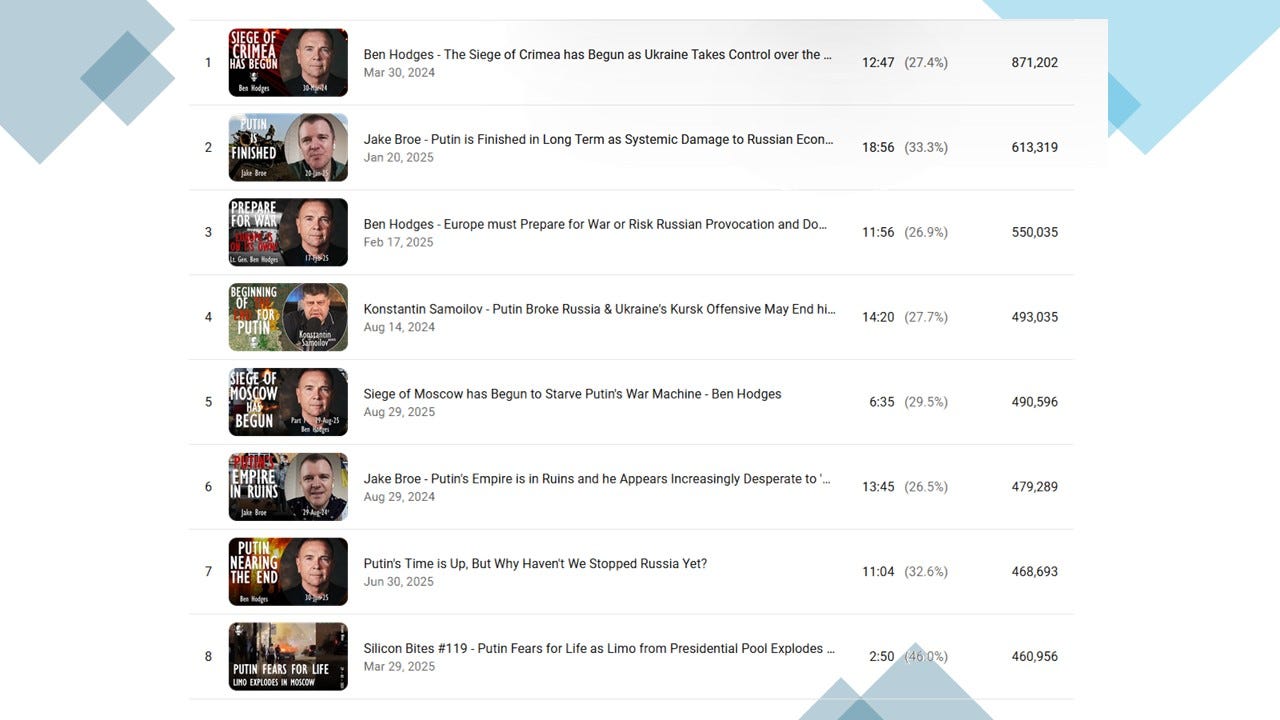

So what works, what performs best, and why? The stats from Silicon Curtain (SC) and Silicon Wafers (SW) give some answers.

What gets prioritised is emotional language: cataclysmic headlines that amplify doom and catastrophe and encourage exaggeration and simplification, or that favour an optimistic and potentially distorted view of reality - i.e. your adversary is collapsing, on the verge of defeat, etc.

Thumbnails that include human faces and caricatured expressions also perform well. Humour works, as does the capitalisation and underscoring of words to emphasise recency - i.e. breaking news, emergency news flash, hot off the press kind of stuff. All eight of the top pieces play into the rage and recency algorithm, though they are far from being the most egregious examples out there. Many creators play this up far more consistently and in a far more divisive way. But none of the content that is more balanced, contemplative or analytical features amongst the top performers, even though most of the channel’s output fits that pattern rather than rage and recency baiting.

What is to be done? The algorithm seemed to change in mid-2025, becoming far more focused on instant gratification and down-weighting the impact of channel loyalty, i.e. recommendations were favoured over subscriptions. The importance of external inputs seemed to increase, i.e. instant reaction and click-through from external platforms into YouTube seemed to give the algorithm a jog, boosting recommendations and elevating content.

It means that producing serious, analytical content and packaging it up in a more sober format will not be viable, either commercially or in terms of building an audience or having an impact. In 2026, the balance is moving towards most of the content becoming far more baiting, simply to remain viable, and it will favour shorter-form content over long. Of course, packaging is more important than the content itself, but it means that more episodes will have to be reactive to short-term events that play into the ‘wish fulfilment’ of the audience. Serious analytical content can still be sneaked in but will have to be packaged up in a much more sensational fashion, bordering on being deceptive, otherwise it will not be shown. It also means that Patreon and Buy Me a Coffee become more important as means of financing, alongside other income streams, such as a book version of Silicon Curtain interviews, coming in 2026.

“Rage and Recency” - why algorithm mechanics are going only one way

The machine promises ‘User Satisfaction’, and social platforms are in the business of monetising that satisfaction, through mining the attention of users. Maximising the proportion of their lives that are spent online versus offline. This isn’t a side-feature, or a bug, this is the product’s fuel and engine, mining the attention hours of its users, in the way that The Matrix envisaged humans becoming batteries for some sentient machine civilisation. But in this version of reality, we are being click-farmed to generate advertising revenues.

The platforms want you to believe that the algorithm is here to help. It will give you more of what you want. Like a kid in a candy shop, with an infinite wallet, and no parental control. YouTube puts it plainly: “The algorithm has two goals” - “Help each viewer find videos they want to watch” and “Maximize long-term viewer satisfaction.” (Google Help)

The hidden tempo driving the need for ever more engagement to generate a profit, is rage and recency. Serving content that is ever more addictive, and which provokes an instantaneous emotional response, and needs to tap into viral behaviours, feed addictions to predictable content types rather than long-term loyalty to a channel, host or brand. In this way the algorithm has gone from encouraging innovation and diverse media product in the first years of the digital revolution, to now punishing innovation and experimentation. Some analysts have called this the ‘Enshittification’ of platforms, driven by a relentless commercial logic that becomes rapacious and extractive, to the detriment of its users and humanity.

The phrase ‘Enshittification’ was coined by Cory Doctorow and describes the three-stage decay of online platforms. They initially attract users with quality, then exploit users for business customers (advertisers), and finally exploit both users and businesses to extract maximum profit, leading to a decline in quality and eventual failure. In the early stages of the digital ecosystem, they are compelled to provide quality services and content, to build market share, in an environment of intense competition. But as markets mature and become saturated, monopolies are established within the ecosystem, and audience penetration reaches maximal levels, the commitment to quality is less important to retain market share.

This process is seen in streaming services (ads on premium), ridesharing (high fees), and search engines (more ads, less relevant results), where platforms prioritize short-term shareholder gains over user experience, turning users and businesses into mere resources to be squeezed, even when they pay. They stay, because there is no choice and competition is stifled. In video content platforms, all of this is true, but there is an additional mechanic which moves from light-touch ‘click farming’ of users, to an extremely rapacious algorithm which seems to maximise their attention, by prioritising content that appeals to pure emotions, rather than long-term loyalty factors. Rage and outrage; shock and controversy; extreme topicality and short lifespan of new content (typically 48 hours or less).

These are the three stages of enshittification as envisaged by Cory Doctorow:

Attract Users: Platforms offer great value to users (e.g., free services, good content) to build a large audience.

Attract Businesses: Once users are locked in, the platform starts favouring business customers (like sellers or advertisers) over users, degrading the user experience.

Extract Value: The platform then exploits both users and businesses to maximize revenue, often by selling prime spots (like search results or playlist slots) to the highest bidder, leading to a worse service for everyone.

Examples include:

Social media: Facebook/Meta became less about connecting friends and more about pushing content from businesses and influencers.

Streaming: Netflix adding ads to its standard plan, forcing users to pay more to remove them.

Search: Google Search prioritizing ads and low-quality content over genuinely helpful results, while monopolising more of users’ attention with AI-led in-platform content.

Marketplaces/Delivery: Amazon and Airbnb increasing fees and adding ads, despite their dominant market positions.

On YouTube and other platforms that offer video content, this manifests when platforms push short-form content by shifting the algorithmic focus from serving users and creators to maximizing profit, leading to a degradation of the long-form content ecosystem and the erosion of stable channel brands. It disincentivises creators from building audience loyalty or innovating, and pushes them towards creating ephemeral, viral content that repeats themes and formats favourable to the algorithm.

Why this happens is down to some very simple mechanics, and the basic decision to serve shareholder interests over those of users:

Two-Sided Markets: Platforms sit between users and businesses, holding both hostage; especially as traditional media markets such as newspapers are destroyed.

Monopoly Power: In dominant markets, there’s little incentive to maintain quality once users have few alternatives, and no avenues to express their discontent.

Short-Term Profit Motive: Executives prioritize immediate shareholder returns over long-term platform health. Short-termism permeates and degrades everything.

YouTube also says the system is “constantly evolving,” learning from an ocean of inputs - Help Centre documentation describes it as learning from “over 80 billion” signals, including watch history, subscriptions, likes, dislikes, “not interested,” and - crucially - “Satisfaction surveys.” (Google Help) But the inexorable direction of travel seems to be to favour short-form content over long, emotional responses over long-term impacts.

Here’s what that means in practice: the platform claims it’s not just chasing clicks or raw watch time; it’s also measuring how you feel afterwards. What you feel, rather than what you think, is what counts. YouTube even describes using surveys to estimate what it calls “valued watch time,” because it doesn’t want viewers “regretting” what they watched. (blog.youtube) But is this borne out by the reality, the lived experience of users, where doom scrolling and dopamine addiction are becoming ever more dominant behaviours and potentially impacting mental health?

Because the algorithm doesn’t just rank content. It sets the tempo of your attention. Rage and recency, over thoughtfulness and insight. YouTube’s own explainer notes that a user’s customised homepage is a mix of subscriptions, personalized recommendations, and “the latest news and information.” (blog.youtube) That’s recency baked into the interface: what’s new, what’s breaking, what’s spiking. The system is structurally biased toward now - not because “now” is wise, but because “now” is measurable, because “now” creates a sense of urgency - digital FOMO - and because it is much better material for attention mining and impulsive viewing. It is the appeal to ‘it can’t wait’, rather than ‘I’ll queue this for later.’ It’s measurable and profitable.

The truth is that even if YouTube’s formal goal is “long-term satisfaction,” the daily competition is for instant reaction - the click, the reflex, the surge of feeling that keeps you clicking from video to video, the digital interruption from reality, the amnesia of scroll bait.
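To make the idea of a structural bias toward “now” concrete, here is a minimal sketch of how a recency weighting could work. It is purely illustrative: YouTube does not publish its ranking formula, and the function names, the 24-hour half-life and the reaction rates below are all assumptions invented for the example.

```python
def recency_weight(age_hours: float, half_life_hours: float = 24.0) -> float:
    """Exponential decay: the weight halves every `half_life_hours`.

    The 24-hour half-life is an assumed value, not a known platform setting.
    """
    return 0.5 ** (age_hours / half_life_hours)


def feed_score(reaction_rate: float, age_hours: float) -> float:
    """Hypothetical score: instant reaction rate scaled by freshness."""
    return reaction_rate * recency_weight(age_hours)


# A two-hour-old reactive clip versus a three-day-old analytical piece
# that actually draws a slightly higher reaction rate per impression.
fresh_bait = feed_score(reaction_rate=0.08, age_hours=2)
older_analysis = feed_score(reaction_rate=0.10, age_hours=72)

print(f"fresh bait:     {fresh_bait:.4f}")
print(f"older analysis: {older_analysis:.4f}")
```

Under these assumed numbers the older piece scores far lower despite the better reaction rate, because three half-lives have cut its weight to one eighth. Any scoring rule with a short half-life would behave in a similar way, which is the sense in which recency can be baked in.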

The Outrage Mechanic: how engagement systems amplify rage

There’s a reason “rage bait” became a defining term. Oxford’s Word of the Year framing - reported by AP - defines rage bait as content “deliberately designed to elicit anger or outrage.” (AP News)

And the incentives are not subtle. Rage bait works because outrage is a high-octane fuel for the engagement economy: comments, shares, quote-posts, threads - activity the system can count. The research literature is increasingly blunt about this.

One pre-registered randomized experiment on engagement-based ranking found that compared to a reverse-chronological feed, Twitter’s engagement-based algorithm “amplifies emotionally charged, out-group hostile content.” (Knight First Amendment Institute)

Even more damning: the same work reports that users do not prefer what the engagement algorithm selects - suggesting the system can override your reflective preferences while still capturing your reactive attention. (Knight First Amendment Institute)

In other words: the platform can get more “engagement” by feeding you content you might say you don’t want, if you had more conscious choice over the options - because your nervous system clicks before your conscious mind can react. Now add social rewards.

A Yale study summary puts it starkly: “Social media platforms like Twitter amplify expressions of moral outrage over time” because users learn that this language gets rewarded with more “likes” and “shares.” (YaleNews) This in turn pushes content creators, particularly those willing to trade quality for quantity, towards the same language in order to gain and sustain large audiences.

A European Commission write-up of related findings goes further, quoting Yale psychologist Molly Crockett: “Amplification of moral outrage is a clear consequence of social media’s business model, which optimizes for user engagement.” (CORDIS)

Rage isn’t an accident. It’s a predictable output of simplistic reward loops - algorithmic and social. Loops that are tethered to commercial incentives and resist all attempts to place safeguards around the algorithms, or legislative restrictions of any kind. Old world media like television and newspapers were never allowed to operate in this laissez faire manner - but the oligarchs of the digital age have made vast investments in politics to ensure they are not limited in any way by legislative frameworks or regulations.
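The contrast between a reverse-chronological feed and an engagement-ranked one, as tested in the experiment cited above, can be sketched in a few lines. This is a toy model and not any platform’s real code: the `Post` fields, the sample posts and the weights in `engagement_score` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    age_hours: float
    comments: int
    shares: int
    likes: int


def engagement_score(post: Post) -> int:
    # Hypothetical weights: shares and comments count for more than likes
    # because they generate further activity the system can count.
    return 3 * post.shares + 2 * post.comments + post.likes


posts = [
    Post("Calm policy explainer", 1, comments=4, shares=2, likes=40),
    Post("THEY are destroying everything", 5, comments=120, shares=80, likes=150),
    Post("Local event recap", 3, comments=10, shares=5, likes=60),
]

# Reverse-chronological feed: newest first, no judgement about reactions.
chronological = sorted(posts, key=lambda p: p.age_hours)

# Engagement-based feed: whatever provoked the most countable activity first.
by_engagement = sorted(posts, key=engagement_score, reverse=True)

print([p.title for p in chronological])
print([p.title for p in by_engagement])
```

With this made-up data the calm explainer leads the chronological feed, while the inflammatory post leads the engagement feed. Nothing in the scoring function asks whether the reaction was anger or appreciation, which is the point: a counter of activity cannot tell the difference.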

Now, let’s bring this back to YouTube specifically. YouTube insists it has guardrails - especially for news and information - and that it cares about responsibility. (blog.youtube)

But we repeatedly see how the platform environment can be exploited at scale, because “recommendations drive” viewership, and the power of brands and loyalty to channels is continuously undermined by the relentless cycle of the algorithm. (blog.youtube)

A recent UK example shows the dynamic in the wild: over 150 anonymous YouTube channels pushing fake and inflammatory anti-Labour content amassed nearly 1.2 billion views in 2025, according to research described by The Guardian. (The Guardian)

Reset Tech’s UK director Dylan Sparks gave a quote that should be printed on the wall of every regulator: “Malicious actors are permitted by YouTube to spread synthetic ‘news’ that disrupts political debate in the UK…” (The Guardian) Yet this is happening on a global scale.

The platforms may not be engaged in ideological warfare as a motive, but they are enabling an environment that favours actors that are purposefully manipulating content for partisan political objectives and those scaling rage bait and misinformation for opportunistic grifting. The mechanism is the same: a system that rewards attention harvesting at industrial scale, and enforces no real rules around quality or objectivity, will attract people who manufacture attention by any means available. Lies not only spread faster in this environment but are easier to manufacture at scale than truthful, well researched, reality-based content.

Geopolitical and civilizational consequences

Algorithms optimized for instantaneous emotion produce societies that are easier to destabilize - because strategic thinking requires time, context, memory, and restraint. And “rage and recency” is engineered to dissolve all four. You can see the political effect in controlled settings.

A Guardian report on a large experiment involving X’s “For You” feed describes how barely perceptible boosts to partisan, anti-democratic content produced measurable increases in hostility - while also spotlighting the underlying business logic: interventions that down-rank divisive content, that respond to rage with rationality, can reduce engagement volume, which “poses challenges for engagement-driven business models,” reinforcing the hypothesis that “content that provokes strong reactions generates more engagement.” (The Guardian)

That is the trade-off in one sentence: quality content is seen as antithetical to engagement revenue. Now, mental health and cognition.

The U.S. Surgeon General’s advisory doesn’t claim every user is harmed because of doom scrolling addiction. It does say something concerning though: “At this time, we do not yet have enough evidence to determine if social media is sufficiently safe for children and adolescents.” (HHS) Concerns of this kind fed into Australia implementing a world-first ban in December 2025, preventing children under 16 from holding accounts on major social media platforms like TikTok, Instagram, Facebook, Snapchat, X, and YouTube, to protect them from harms like cyberbullying and mental health issues. Though under-16s can still view public content, they cannot post or interact; platforms face huge fines for non-compliance.

The U.S. Surgeon General’s advisory adds that while there may be benefits for some, there are “ample indicators” of “profound risk of harm” to youth mental health and well-being from excessive exposure to social media content. (HHS)

If you build a media environment that continuously rewards high-arousal reaction, you shouldn’t be surprised when anxiety rises, attention fractures, and political discourse becomes something of a blood sport. Let’s translate this into state capacity:

Democracies become more impulsive and more polarised, with shorter policy horizons and lower tolerance for complex trade-offs. The simplification of political discourse tends to favour populist politicians and grifters over the longer term: people who promise unrealistic but simplistic solutions to complex problems.

Authoritarian states exploit this asymmetry: they don’t need to “convince” everyone; they only need to flood the zone with emotionally optimized content, accelerate distrust, and make consensus and coherent strategy politically unattainable.

Information operations don’t require perfect lies; they require inflammatory feelings - fear, anger, humiliation, disgust, panic - delivered with ruthless accuracy to users.

The “civilizational degradation” question is a straightforward systems claim: if your information environment consistently selects for reflex over reflection, society becomes less capable of long-term coordination, less cohesive, less realistic, less solution oriented.

What are the solutions to this? They will not be easy, or necessarily popular:

- Breaking the monopolies of the core platforms is the initial priority. Introduce more competition, more choice, to reverse the trend of enshittification. Allow users to export their profiles and content to competing platforms, rather than forcing them to be locked into monopolistic platform providers.

- Stop pretending that a fully libertarian approach to digital media increases freedom - the evidence shows that it does not and contributes to pollution of the ecosystem.

- Enforce freedom of expression for individuals - apart from clearly defined illegal hate speech - and become more tolerant of individual freedom of expression. BUT clamp down hard on anything that distorts or manipulates the common digital space:

- This includes a crackdown on non-human avatars and bots - rooting out those entities that deform the public information space, through scaled and weaponised AI clones, bot accounts and paid actors injecting non-organic content and reactions into media.

- Clamp down hard on artificial distortions of content popularity through interactions by non-human and commercial entities. I.e. eliminate the deforming power of Paid Speech agents as opposed to the free speech of individual citizens.

- Preserve the sovereignty of content, by ensuring that malign foreign actors cannot hijack a platform and its content to change public opinion, or try to weaken their adversaries, as Russia and China seem to do. This is especially important as the rise of AI will dramatically reduce the cost of creating such manipulative content at scale.

- Regulate the algorithms to minimise attention farming and define a public interest aspect to the social media sphere, where pure profit motive and attention harvesting cannot be the prime directive. Balanced mental health must become a consideration.

- Strengthen the laws on digital libel and defamation - to introduce some accountability for online content creation, and to create parity with traditional media formats.

- Demand auditability and transparency. The EU’s Digital Services Act explicitly targets recommender-system accountability - audits, risk assessments, researcher access - because these systems shape what people see and how they think. (Interface)

- Ensure that short-term algorithmic biases are balanced with longer term signals - to ensure longer form (more considered content) is not squeezed out by short-form. This will help to increase channel loyalty and encourage more innovation and investment.

- Legislate to ensure a level playing field - where platform owners cannot load the dice, that is, manipulate algorithms to suppress one political view over another. Such down-weighting is apparent on X, and possibly other platforms, whether that be the suppression of left or right wing views, or of specific communities such as the pro-Ukraine lobby. Ensure the same rules apply without fear or favour, and without meddling.
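The proposal above to balance short-term algorithmic biases with longer-term signals can be illustrated with a simple weighted blend. This is only a sketch of the idea: the function name, the `long_term_weight` parameter and the example scores are hypothetical, and a real regulatory or engineering approach would be far more involved.

```python
def blended_score(short_term: float, long_term: float,
                  long_term_weight: float = 0.5) -> float:
    """Blend an instant-reaction signal with a loyalty/satisfaction signal.

    `long_term_weight` is an assumed tuning knob between 0 and 1:
    0 ranks purely on instant reaction, 1 purely on long-term signals.
    """
    if not 0.0 <= long_term_weight <= 1.0:
        raise ValueError("long_term_weight must be between 0 and 1")
    return (1 - long_term_weight) * short_term + long_term_weight * long_term


# Illustrative scores on a 0-1 scale, invented for this example.
viral_short = {"short_term": 0.9, "long_term": 0.2}
long_form = {"short_term": 0.4, "long_term": 0.9}

for weight in (0.0, 0.5):
    viral = blended_score(**viral_short, long_term_weight=weight)
    considered = blended_score(**long_form, long_term_weight=weight)
    winner = "viral short" if viral > considered else "long-form"
    print(f"long_term_weight={weight}: {winner} ranks first")
```

With no weight on long-term signals the viral short wins; at an even split the long-form piece overtakes it. Where that knob sits is a design choice, and under this proposal it would be open to public-interest scrutiny rather than set by commercial incentive alone.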

If we can’t fine-tune the machinery that governs public attention, we won’t have a functioning public sphere. If short-term commercial incentives are dominant, then instead of a public marketplace of ideas and free speech, we will be left with a casino, where the only currency transacted is rage and clickbait, with attention spans spiralling ever downwards.
